Someone Put Claude in a Bash Loop Called Ralph Wiggum. It Changed How I Build Software.

A one-line bash script called Ralph Wiggum fixed context rot by restarting Claude in a loop. Then Anthropic broke it. That failure led me to subagents and changed how I build software.

Last post I told you your AI gets dumber the longer you use it. This post is about the guy who fixed it with a while loop.

The One-Line Fix

An Australian developer named Geoffrey Huntley put Claude Code in a bash loop. The entire script was one line:

    while :; do cat PROMPT.md | claude ; done

Feed Claude a prompt. Let it work. Restart. Repeat. Forever.

He called it Ralph Wiggum, after the Simpsons character known for being ignorant, persistent, and optimistic. The loop just keeps going no matter what, like Ralph cheerfully walking into situations he doesn’t understand.

Y Combinator teams started using it. It went viral. Even Boris Cherny, the creator of Claude Code, said he uses it.

The approach looked comically simple. It also changed everything about how I use AI to build software.

Why the Claude Code Workflow Worked

If you read the context rot post, you already know the problem. Long sessions degrade AI output. The context window fills up, the AI starts forgetting constraints, repeating rejected ideas, drifting off track.

Everyone noticed this. Most people just complained about it. Geoffrey did something about it.

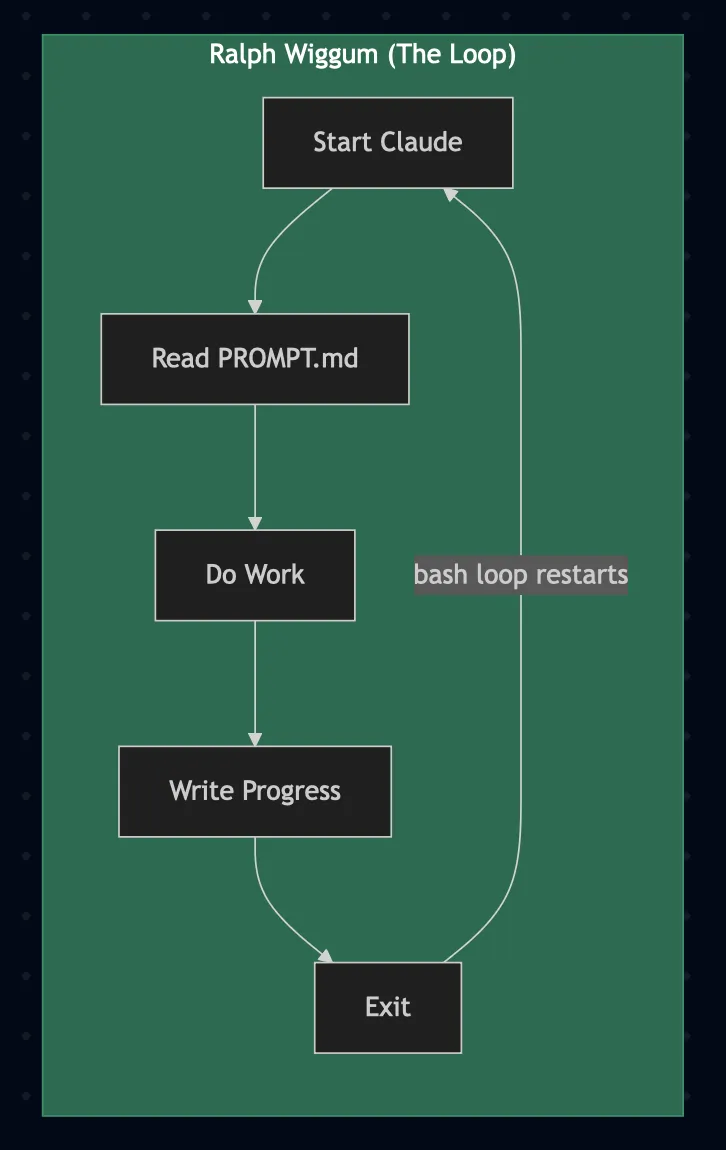

Ralph Wiggum’s philosophy was simple: iteration over perfection. Failures are data. Just keep looping. State lived in a PROMPT.md file. Every restart, Claude read the file, picked up where it left off, did more work, wrote progress back. Then Claude exited, and the bash loop started it fresh.

The cycle looked like this:

The Ralph Wiggum loop. Dumb. Effective.

It looked hacky as hell. A bash while-loop babysitting an AI. But the people using it were shipping faster than anyone else. The AI wasn’t smarter. It just never got dumb. Fresh context every cycle. No rot.
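The original one-liner never stops on its own. The restart cycle described above can be sketched as a bounded variant. This is a minimal sketch under my own assumptions, not Geoffrey's script: `AGENT_CMD`, `MAX_ITERATIONS`, and the `DONE` marker file are hypothetical names, and it assumes the prompt tells the agent to create `DONE` once nothing is left to do.

```shell
# Bounded Ralph-style loop (sketch).
# Hypothetical names, not part of the original one-liner:
#   AGENT_CMD       command that reads a prompt on stdin (defaults to claude)
#   MAX_ITERATIONS  upper bound on restarts
#   DONE            marker file the agent is asked to create when finished
ralph_loop() {
  local prompt_file="$1"
  local max_iterations="${MAX_ITERATIONS:-10}"
  local agent_cmd="${AGENT_CMD:-claude}"
  local i=1

  while [ "$i" -le "$max_iterations" ]; do
    # Every pass is a new process, so the agent starts with empty context
    # and sees only the prompt file plus whatever is on disk.
    $agent_cmd < "$prompt_file" || echo "iteration $i failed, looping anyway" >&2

    if [ -e DONE ]; then
      echo "finished after $i iteration(s)"
      return 0
    fi
    i=$((i + 1))
  done

  echo "stopped after $max_iterations iteration(s) without a DONE file" >&2
  return 1
}
```

Calling `ralph_loop PROMPT.md` behaves like the one-liner with a ceiling on it. A failed pass is logged and the loop carries on, which matches the "failures are data" idea. Delete `DONE` before starting a new run.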

Then Anthropic Made It “Better”

Anthropic saw the pattern and built it into Claude Code as an official plugin.

But their version didn’t restart. It kept the session alive.

On paper, that sounds like an improvement. No restart overhead. Continuous flow. The AI just keeps working.

In practice? It was a context rot factory.

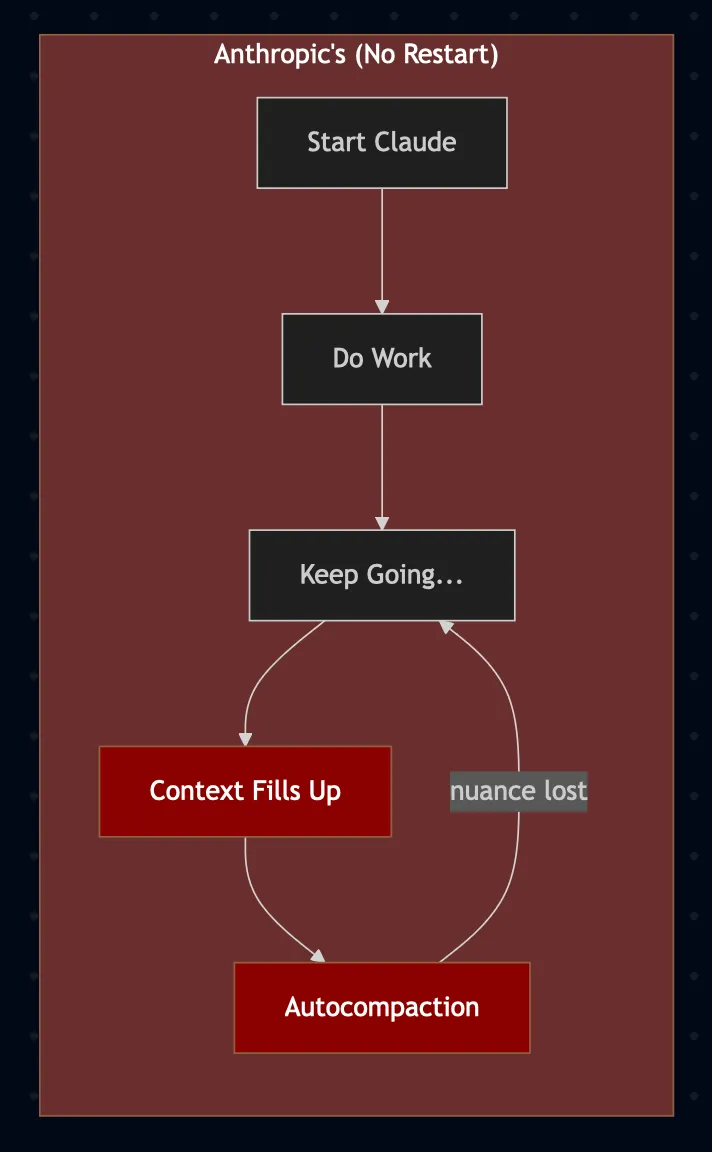

Anthropic’s “improved” version. Spot the problem.

The whole point of Ralph Wiggum was the restart. Fresh context every cycle. Remove the restart and you’re back to the exact same degradation problem I wrote about last time. The context window fills up. Autocompaction kicks in. Nuance gets flattened. The AI drifts.

I was using it for real work and watching the quality degrade in real time between compactions. Long tasks would start strong and end with the AI suggesting things I’d already rejected three compaction cycles ago.

They took the hack and made it “better” by removing the part that made it work. The restart wasn’t a bug. It was the entire feature.

The Click

Watching the official version struggle made something click for me.

The restart wasn’t really about restarting. It was about scoping.

Each cycle of Ralph Wiggum was really doing this: give a fresh worker one task, let them do it, collect the result. The restart was just a crude way to enforce scope. Kill the context, start clean, do one thing.

So what if instead of restarting the same agent over and over, you spawned a new one for each task?

Not a loop. An orchestrator.

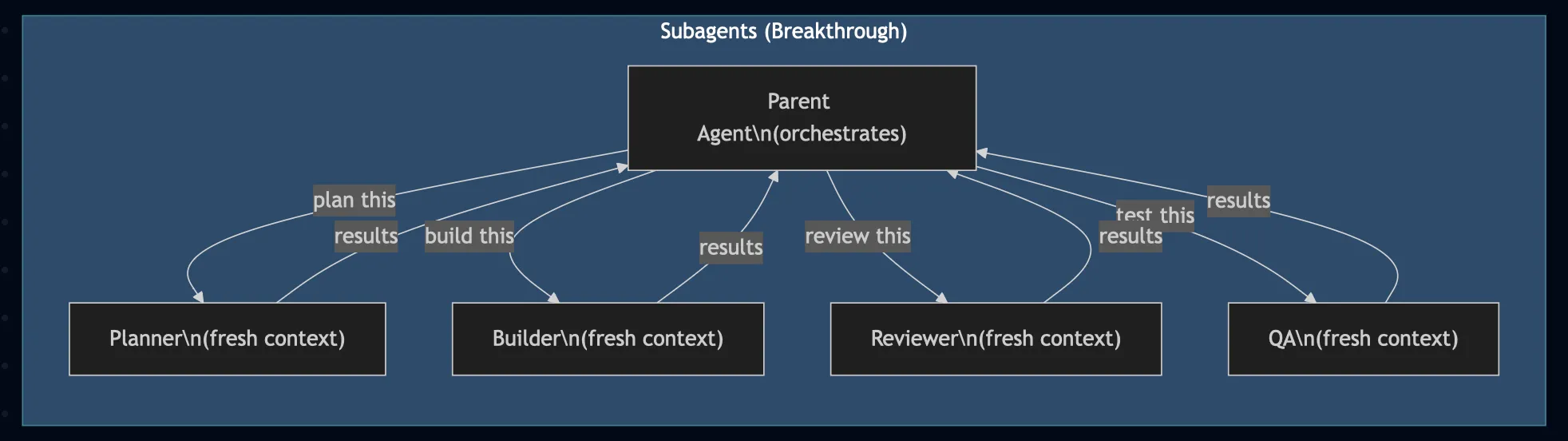

A parent agent that holds the big picture. Child agents, called subagents, that each get one scoped task, fresh context, and nothing else. They execute, return results, and the parent integrates.

The parent never gets polluted by the children’s work. Each child starts clean. The mess stays contained.

Ralph Wiggum was doing manual orchestration with a bash loop. Subagents do the same thing, but intentionally. And they don’t share context with each other. That’s the point.
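The step from "restart the same agent" to "spawn a new one per task" can be modeled in the same bash idiom. To be clear, this is a sketch of the idea using plain processes, not how Claude Code's built-in subagents are implemented. `AGENT_CMD` and the task and result directories are hypothetical names.

```shell
# One fresh agent process per task (sketch).
# Hypothetical layout: each task is a .md file in task_dir, and each result
# lands in result_dir under the same base name.
run_scoped_tasks() {
  local task_dir="$1"
  local result_dir="$2"
  local agent_cmd="${AGENT_CMD:-claude}"
  local task name

  mkdir -p "$result_dir"
  for task in "$task_dir"/*.md; do
    [ -e "$task" ] || continue   # glob matched nothing: no tasks to run
    name=$(basename "$task" .md)

    # The child sees only its own task file on stdin. Its working context
    # dies with the process; only the result file survives for the parent.
    $agent_cmd < "$task" > "$result_dir/$name.out" \
      || echo "task $name failed" >&2
  done
}
```

The parent here is just the script: it decides what goes into each task file and reads the result files afterwards, so it never sees the intermediate work.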

Subagents. Fresh workers, scoped tasks, clean handoffs.

Why Claude Code Subagents Change Everything

I went from fighting context rot in long sessions to never experiencing it at all.

Every task gets a fresh agent with exactly the context it needs. A planning task gets the PRD and the codebase structure. A building task gets the relevant files and the spec for one story. A review task gets the diff and the requirements. Nothing more.

Fresh context means better reasoning. Scoped work means no tangents. Results pass back to the parent, not the mess that produced them.

My output quality jumped. Not a little. Dramatically. Tasks that used to take a full session of fighting bad suggestions were getting done in one shot by a subagent with clean context. I was shipping features faster than I ever had, and the code quality was higher because each agent was focused on one thing instead of juggling an entire session’s worth of accumulated garbage.

You can even run tasks in parallel. Planning one feature while building another while reviewing a third. They don’t interfere with each other because they don’t share context. The parent orchestrates, the children execute.
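Modeled with plain processes, running in parallel is a small change: start each child in the background and wait for all of them. Again a sketch with hypothetical names (`AGENT_CMD`, the task and result directories), not Claude Code's own mechanism.

```shell
# Run every task at once, each in its own process (sketch).
run_tasks_in_parallel() {
  local task_dir="$1"
  local result_dir="$2"
  local agent_cmd="${AGENT_CMD:-claude}"
  local task name

  mkdir -p "$result_dir"
  for task in "$task_dir"/*.md; do
    [ -e "$task" ] || continue   # glob matched nothing: no tasks to run
    name=$(basename "$task" .md)

    # The trailing "&" puts the child in the background, so the loop
    # moves on to the next task without waiting for this one.
    $agent_cmd < "$task" > "$result_dir/$name.out" &
  done

  # Block until every background child has exited, then let the parent
  # integrate the result files.
  wait
}
```

Separate context is not the same as separate files. If two tasks edit the same part of the codebase, they can still collide on disk, so this works best when the tasks are scoped to different areas.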

What a Claude Code Multi-Agent Workflow Looks Like

Here’s a real workflow. I want to add a feature.

Planning agent: “Here’s the PRD. Break it into implementation tasks.” Fresh context. Just the PRD and the codebase structure. It comes back with a plan.

Building agent: “Here’s task one. Implement it.” Fresh context. Just the relevant files and the task spec. It writes the code.

Review agent: “Here’s what changed. Review it against the requirements.” Fresh context. Just the diff and the spec. It catches issues.

QA agent: “Here’s the feature. Write tests and verify it works.” Fresh context. Just the feature code and the acceptance criteria. It validates.

Each one starts clean. Each one does one job well. The parent orchestrates: plan → build → review → test → iterate.
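As a rough sketch, the hand-offs above can be written as a chain of stages where each stage receives only the files named for it. Every name here is an assumption for illustration: `AGENT_CMD`, `WORK_DIR`, the `prompts/*.md` files, and `PRD.md`. The inputs are also simplified: a real build stage would get the relevant source files, and a real review stage would get a diff.

```shell
# run_stage NAME INPUT...
# Feed the listed inputs, and nothing else, to a fresh agent process and
# save its output as WORK_DIR/NAME.out. All names are hypothetical.
run_stage() {
  local name="$1"
  shift
  local agent_cmd="${AGENT_CMD:-claude}"
  local work_dir="${WORK_DIR:-work}"
  local f

  for f in "$@"; do
    [ -r "$f" ] || { echo "stage $name: missing input $f" >&2; return 1; }
  done

  mkdir -p "$work_dir"
  cat "$@" | $agent_cmd > "$work_dir/$name.out"
}

# plan -> build -> review -> qa; "&&" stops the chain at the first failure.
run_pipeline() {
  local work_dir="${WORK_DIR:-work}"

  run_stage plan   prompts/plan.md   PRD.md &&
  run_stage build  prompts/build.md  "$work_dir/plan.out" &&
  run_stage review prompts/review.md "$work_dir/build.out" PRD.md &&
  run_stage qa     prompts/qa.md     "$work_dir/build.out"
}
```

The only thing that crosses a stage boundary is a result file. The iterate step is left out: in practice the parent would read `review.out` and `qa.out` and decide whether to run the build stage again.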

The Bigger Picture

This pattern should look familiar.

No one person on a dev team holds all context. Specialists do focused work within their domain. A lead orchestrates and integrates the pieces. Nobody invites the entire company to every meeting.

I was trying to hack around context limits. I accidentally arrived at the same structure that software teams have used for decades. Fresh workers with scoped responsibilities, coordinated by someone with the big picture.

Once I saw it, I couldn’t unsee it. And I started wondering: what if you leaned into that structure intentionally? What if you took everything you knew about running engineering teams, the roles, the processes, the handoffs, and applied it to AI?

I wasn’t building a hack anymore. I was building toward something bigger.

Ralph Wiggum was a bash loop someone built to work around a problem. Anthropic tried to formalize it and accidentally broke the thing that made it work. That failure pushed me to subagents. And subagents turned out to be the real breakthrough.

Fresh workers. Scoped tasks. Clean handoffs. A parent that orchestrates without getting buried in the details.

Sound familiar? It should. It’s how every good engineering team works.

Next up: If AI agents work like team members, maybe we should give them the same structure we give humans. The same ceremonies, the same briefings, the same process we’ve been refining for more than twenty years.